Imagine being able to type a message, move a robotic arm, or restore lost speech without using a keyboard, a screen, or even your voice. Just thought. A decade ago, that sounded like science fiction. Today, brain chip technology is no longer a distant idea tucked away in futuristic movies or speculative research papers. It’s real, it’s happening now, and it’s already changing lives in quiet but profound ways.

I first started paying close attention to brain chip technology while covering breakthroughs in neurotechnology and medical innovation. What struck me wasn’t the hype—it was the very human stories underneath it. Patients with paralysis learning to communicate again. People with neurological disorders gaining back a sense of control. Engineers and doctors working side by side, trying to decode the most complex system we know: the human brain.

This topic matters because it sits at the intersection of health, ethics, technology, and identity. Brain chip technology isn’t just about smarter devices; it’s about redefining how humans interact with machines—and possibly with each other. In this guide, I’ll walk you through what brain chip technology actually is, how it works, where it’s already being used, what tools and companies are leading the charge, common misconceptions, and what the future realistically looks like (beyond the headlines). My goal is simple: help you understand this technology clearly, honestly, and practically.

Brain Chip Technology Explained in Simple Terms

When people hear “brain chip,” they often imagine a tiny microchip dropped into the brain that suddenly makes someone super-intelligent. That’s not how it works—at least not today. Brain chip technology, more accurately called brain–computer interface (BCI) technology, is about creating a direct communication pathway between the brain and an external device.

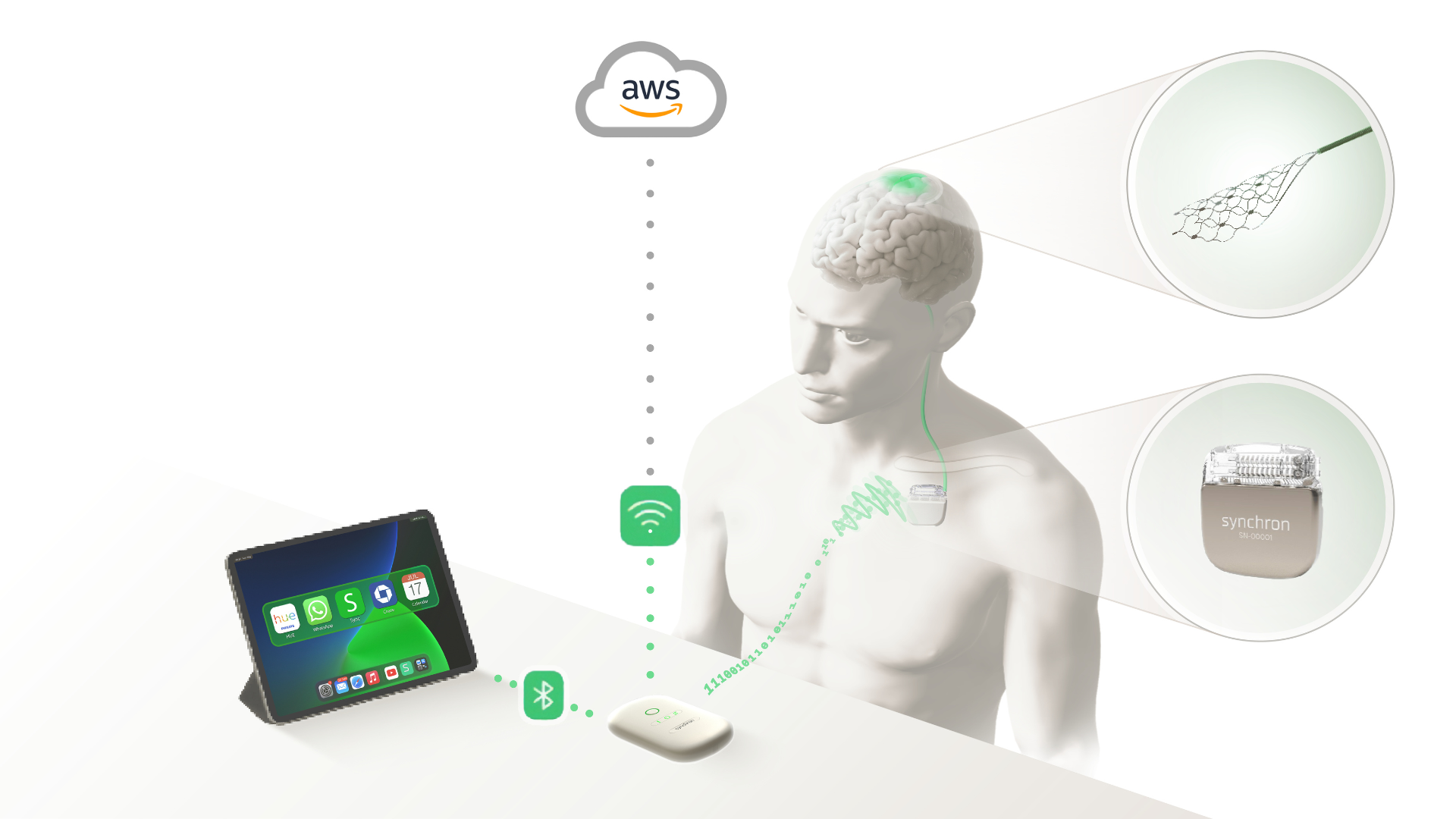

Think of your brain as a powerful biological computer that communicates through electrical signals. Every thought, movement, and sensation involves neurons firing in specific patterns. Brain chip technology works by reading (and sometimes stimulating) those signals. A chip or implant is placed in or near the brain, where it detects neural activity. That data is then translated by software into commands a computer can understand—like moving a cursor, selecting letters, or controlling a prosthetic limb.

A useful analogy is a microphone and translator. The brain chip “listens” to neural signals, the software “translates” them, and the connected device “acts” on them. Importantly, most current systems are read-only, meaning they interpret brain signals rather than inserting thoughts or information into the brain.

There are invasive and non-invasive forms of brain chip technology. Invasive systems involve surgically implanted chips for higher precision, while non-invasive systems use external sensors like EEG caps. Each has trade-offs in accuracy, safety, and long-term use. Understanding this distinction is key to understanding where the technology stands today—and where it’s going next.

How Brain Chip Technology Actually Works (Step by Step)

To really grasp brain chip technology, it helps to break the process down into clear stages. While implementations vary, most systems follow the same basic workflow.

First comes signal detection. Neurons communicate using electrical impulses. A brain chip uses electrodes to detect these impulses from specific brain regions. In invasive systems, electrodes sit directly on or within the brain tissue, close enough to record the activity of individual neurons or small groups of them. In non-invasive systems, sensors sit on the scalp and pick up weaker signals that have been blurred by the skull and averaged across large populations of neurons.
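To make signal detection a little more concrete, here is a minimal Python sketch of one simple idea: flagging moments when a recorded voltage trace rises well above its background noise. Everything in it is an illustrative assumption rather than how any particular device works: the function name `detect_spikes`, the threshold factor `k`, and the synthetic signal (bounded random noise with three artificial "spikes" added) are all made up for the example.

```python
import random
import statistics


def detect_spikes(samples, k=5.0):
    """Return indices where the signal rises above a noise-based threshold.

    The threshold is the median plus k times a robust noise estimate
    (median absolute deviation, scaled by 0.6745 so it is comparable to a
    standard deviation for Gaussian noise). Only the first sample of each
    crossing is reported.
    """
    median = statistics.median(samples)
    mad = statistics.median(abs(s - median) for s in samples)
    threshold = median + k * mad / 0.6745

    spikes = []
    above = False
    for i, s in enumerate(samples):
        if s > threshold and not above:
            spikes.append(i)  # rising edge: signal just crossed the threshold
        above = s > threshold
    return spikes


# Synthetic stand-in for one electrode channel (hypothetical data):
# bounded background noise plus three large artificial spikes.
rng = random.Random(0)
signal = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
for idx in (200, 500, 800):
    signal[idx] += 20.0

print(detect_spikes(signal))  # [200, 500, 800]
```

Real recordings are far messier, and real systems filter and clean the signal before anything like this step. The point is only that "detection" means turning a continuous electrical trace into discrete events that software can work with.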

Next is signal decoding. Raw brain signals are noisy and complex. Advanced algorithms—often powered by machine learning—analyze patterns in the data. Over time, the system learns that certain neural patterns correspond to specific intentions, such as moving a hand or selecting a letter.
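As a rough illustration of what "learning that certain patterns correspond to specific intentions" can mean, here is a toy decoder in Python. It uses a nearest-centroid classifier, one of the simplest pattern-matching methods, chosen here for readability and not because any named system uses it. The feature vectors, the labels ("left", "right", "rest"), and the function names are hypothetical.

```python
import math


def train_centroids(examples):
    """Average the feature vectors seen for each label.

    examples: list of (feature_vector, label) pairs.
    Returns a dict mapping each label to its mean feature vector.
    """
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def decode(centroids, features):
    """Return the label whose average pattern is closest to the new one."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical training data: each vector could stand for the activity
# level on three electrodes while the user imagines a movement.
training = [
    ([9.0, 1.0, 1.0], "left"),
    ([8.0, 2.0, 1.0], "left"),
    ([1.0, 9.0, 2.0], "right"),
    ([2.0, 8.0, 1.0], "right"),
    ([1.0, 1.0, 1.0], "rest"),
    ([2.0, 1.0, 2.0], "rest"),
]

centroids = train_centroids(training)
print(decode(centroids, [7.5, 1.5, 1.0]))  # left
```

Production decoders are far more sophisticated, but the core loop is the same: collect labeled examples, summarize them, and match new activity against what was learned.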

Then comes translation into action. Once decoded, those intentions are converted into commands for external devices. This could mean moving a cursor, typing text, controlling a wheelchair, or activating a robotic limb.
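Here is a small Python sketch of this translation step for the cursor case. It assumes the decoder hands over an intent label plus a confidence score, and that low-confidence guesses should be ignored so the cursor doesn't jitter. The confidence threshold, the step size, and all names (`Cursor`, `MOVES`, `apply_intent`) are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    x: int = 0
    y: int = 0


# Hypothetical mapping from decoded intent to a direction on screen.
MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}


def apply_intent(cursor, intent, confidence, min_confidence=0.7, step=10):
    """Return a new cursor position, ignoring unknown or low-confidence intents."""
    if confidence < min_confidence or intent not in MOVES:
        return cursor
    dx, dy = MOVES[intent]
    return Cursor(cursor.x + dx * step, cursor.y + dy * step)


# A made-up stream of decoder outputs: (intent, confidence).
decoded_stream = [("right", 0.9), ("down", 0.95), ("left", 0.4), ("blink", 0.99)]

cursor = Cursor()
for intent, confidence in decoded_stream:
    cursor = apply_intent(cursor, intent, confidence)

print(cursor)  # Cursor(x=10, y=10)
```

The same pattern applies to a wheelchair or a robotic limb: the decoded intent is looked up in a table of allowed commands, and anything uncertain or unrecognized results in no action.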

Finally, some systems include feedback loops. The device sends information back to the user, often visually or through subtle stimulation. This helps users refine control, much like learning to use a new tool or instrument.

What’s important here is adaptability. Brain chip technology isn’t plug-and-play. Users train with the system, and the system learns the user. That mutual adaptation is what makes long-term use possible—and increasingly effective.
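One simple way to picture the system's half of that mutual adaptation is a stored pattern that gets nudged a little toward each new observation. The Python sketch below uses an exponential moving average for this. That is a generic technique picked for illustration, not a description of any specific product, and the numbers and the name `adapt_centroid` are made up.

```python
import math


def adapt_centroid(centroid, features, rate=0.1):
    """Nudge a stored pattern toward a newly observed one.

    rate controls how quickly old knowledge is replaced: 0 means never
    adapt, 1 means always overwrite with the latest observation.
    """
    return [(1 - rate) * c + rate * f for c, f in zip(centroid, features)]


# Hypothetical scenario: the pattern the system learned for "move left"
# no longer matches what the user's signals look like a few weeks later.
stored = [8.0, 2.0]
drifted = [6.0, 4.0]

gap_before = math.dist(stored, drifted)
for _ in range(50):
    stored = adapt_centroid(stored, drifted)
gap_after = math.dist(stored, drifted)

print(f"gap before: {gap_before:.2f}, gap after: {gap_after:.2f}")
```

A small `rate` keeps the system stable against one-off noisy readings, while a larger one tracks change faster. Choosing that balance is part of what calibration sessions are for.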

The Real Benefits and Use Cases of Brain Chip Technology

The most powerful applications of brain chip technology today are medical—and that’s where the real impact is already visible. For individuals with paralysis, spinal cord injuries, or neurodegenerative diseases, BCIs can restore lost abilities in ways that were once impossible.

One of the most meaningful use cases is communication restoration. Patients who cannot speak due to ALS or stroke can use brain chip technology to type messages using thought alone. That may sound simple, but for someone who hasn’t communicated independently in years, it’s life-changing.

Another major benefit is mobility and motor control. Brain chips can help users control prosthetic limbs or robotic exoskeletons. In clinical trials, patients have used BCIs to grasp objects, pour water, and perform everyday tasks. These aren’t lab tricks—they’re practical steps toward independence.

There’s also growing research into neurological disorder treatment. Conditions like Parkinson’s disease, epilepsy, and chronic pain may be managed through targeted brain stimulation combined with intelligent monitoring.

Beyond medicine, researchers are exploring human–computer interaction enhancements. Gamers, designers, and engineers may eventually use brain chip technology for faster, more intuitive control. While consumer applications are still early, the foundation is being laid now.

Step-by-Step Guide: What It Takes to Use Brain Chip Technology Today

Despite the headlines, brain chip technology is not something you casually buy and install. Current use involves structured clinical or research environments. Understanding this process helps separate reality from hype.

The journey usually begins with medical evaluation or research eligibility. Candidates are assessed for neurological condition, overall health, and suitability for implantation or testing. Ethical approval and informed consent are central here.

Next comes system selection. Doctors and researchers choose between invasive and non-invasive BCIs depending on the goal. High-precision motor control typically requires implants, while communication experiments may use non-invasive tools.

If the system is invasive, surgical implantation follows. This is performed by neurosurgeons using image guidance to place the electrodes precisely. Recovery time varies, but modern techniques aim to minimize tissue damage.

Then comes calibration and training. Users spend weeks or months training the system. This phase is crucial. The brain adapts, the software learns patterns, and performance improves steadily.

Finally, there’s ongoing monitoring and adjustment. Brain signals change over time, so systems must be updated and recalibrated under continued clinical and ethical oversight.
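To show what "monitoring" might look like in software, here is a minimal Python sketch that tracks recent decoding accuracy and flags when recalibration looks necessary. It assumes accuracy can be checked during short practice tasks where the intended target is known. The window size, the 80% threshold, and the `DriftMonitor` class are hypothetical choices for the example.

```python
from collections import deque


class DriftMonitor:
    """Track recent decoding results and flag when accuracy drops too low."""

    def __init__(self, window=20, min_accuracy=0.8):
        self.results = deque(maxlen=window)  # keeps only the latest results
        self.min_accuracy = min_accuracy

    def record(self, correct):
        """Store one result; return True if recalibration is suggested."""
        self.results.append(bool(correct))
        window_full = len(self.results) == self.results.maxlen
        accuracy = sum(self.results) / len(self.results)
        return window_full and accuracy < self.min_accuracy


monitor = DriftMonitor()

# Twenty correct practice trials, then a run of misses as signals drift.
flags = [monitor.record(True) for _ in range(20)]
flags += [monitor.record(False) for _ in range(5)]

print(flags.index(True))  # 24: the fifth miss pushes accuracy below 80%
```

In practice the decision to recalibrate would involve clinicians and more than one metric, but a rolling check like this captures the basic idea: performance is watched continuously and not assumed to stay fixed.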

This step-by-step reality is far removed from instant superhuman upgrades—but it’s also far more meaningful and responsible.

Tools, Companies, and Platforms Leading Brain Chip Technology

Several organizations are shaping the future of brain chip technology, each with a distinct approach and philosophy.

Neuralink is perhaps the most widely known name. Its focus is on high-bandwidth implanted BCIs using ultra-thin electrode threads and robotic surgery. Neuralink aims to address paralysis, blindness, and neurological disorders, with long-term ambitions that include enhanced human–AI interaction.

BrainGate represents a more research-driven, academic approach. Working closely with universities and hospitals, it has produced some of the most credible peer-reviewed results in BCI communication and motor control.

Synchron takes a less invasive route, delivering its electrodes through blood vessels instead of through open brain surgery. This approach may lower surgical risk and broaden accessibility.

Low-cost, non-invasive tools also exist, particularly in research and experimentation. EEG-based headsets allow developers to explore brain signal interaction, though with limited precision. Implant-based systems offer higher performance, but they are available only through clinical and research programs and come with medical and ethical complexity.

Each option involves trade-offs between accuracy, risk, cost, and accessibility. There is no one-size-fits-all solution—yet.

Ethical Concerns, Risks, and Limitations You Should Know

Any honest discussion of brain chip technology must address its risks and ethical questions. These aren’t side issues; they’re central to responsible progress.

Surgical risks are the most obvious. Invasive implants carry risks of infection, inflammation, and long-term tissue response, such as scar tissue forming around the electrodes and degrading signal quality. Even with precision surgery, the brain is delicate, and unknowns remain about decades-long implantation.

Data privacy is another major concern. Brain data is deeply personal. Who owns it? How is it stored? Could it be misused? These questions don’t have easy answers, and regulations are still evolving.

There’s also the issue of access and inequality. If brain chip technology becomes powerful and expensive, who gets it? Medical necessity will likely come first, but enhancement applications could widen social divides.

Finally, there’s psychological adaptation. Users may experience identity shifts, frustration during training, or dependency on the system. Long-term mental health support is essential.

Understanding these limitations doesn’t diminish the technology—it grounds it in reality.

Common Mistakes and Misconceptions About Brain Chip Technology

One of the biggest mistakes people make is assuming brain chip technology is about mind control. It’s not. Current systems interpret neural signals; they don’t insert thoughts or control behavior.

Another misconception is that implants instantly work. In reality, learning to use a brain chip is like learning a new language or instrument. Progress comes with time and practice.

People also overestimate consumer readiness. While demos look impressive, widespread everyday use is still years away. The technology must mature medically, ethically, and socially.

Finally, many assume AI replaces the human brain. In truth, brain chip technology amplifies human intention—it doesn’t override it.

Recognizing these myths helps set realistic expectations and makes for more meaningful conversations.

The Future of Brain Chip Technology: What’s Actually Coming Next

Looking ahead, progress in brain chip technology will likely be gradual but transformative. Expect improved materials that reduce immune response, smarter decoding algorithms, and more adaptive systems that learn continuously.

Medical applications will expand first—speech restoration, vision support, and neurological treatment. Consumer applications may follow, but cautiously.

One promising direction is hybrid systems, combining brain chips with wearable tech and AI assistants. Rather than replacing existing tools, BCIs may quietly enhance them.

The future won’t look like instant telepathy or uploaded minds. It will look like people quietly regaining abilities they thought were lost forever—and that’s far more powerful.

Conclusion

Brain chip technology isn’t about turning humans into machines. It’s about building bridges—between thought and action, intention and expression, loss and possibility. When you strip away the hype, what remains is a deeply human story of resilience, curiosity, and care.

We’re still early in this journey, but the progress so far is real, measurable, and meaningful. If you’re curious, cautious, or even skeptical, that’s healthy. The best conversations around brain chip technology happen when excitement and responsibility move forward together.

If this topic sparked questions or ideas, I encourage you to keep exploring, follow credible research, and join the conversation. The future of brain chip technology isn’t just being built in labs—it’s being shaped by informed, thoughtful people.

FAQs

What is brain chip technology in simple terms?

Brain chip technology allows the brain to communicate directly with computers or devices using neural signals.

Is brain chip technology safe?

Current systems are carefully tested, but invasive implants carry surgical and long-term risks that are still being studied.

Can brain chips read thoughts?

They interpret specific neural patterns related to intent, not abstract thoughts or memories.

Who benefits most from brain chip technology today?

Patients with paralysis, speech loss, or neurological disorders see the most immediate benefits.

Are brain chips available to the public?

Mostly through clinical trials and research programs, not consumer retail.

Adrian Cole is a technology researcher and AI content specialist with more than seven years of experience studying automation, machine learning models, and digital innovation. He has worked with multiple tech startups as a consultant, helping them adopt smarter tools and build data-driven systems. Adrian writes simple, clear, and practical explanations of complex tech topics so readers can easily understand the future of AI.